Microscope-based augmented reality (AR) can be applied successfully to various kinds of spinal procedures, improves anatomical orientation during surgery, and offers potential as a tool for education, a study published in the Global Spine Journal has concluded. The study, by Barbara Carl (University Marburg, Marburg, Germany) and colleagues, investigated how microscope-based AR support can be used in spine surgery.

The study covered 42 spinal procedures: 12 intradural and eight extradural tumours, seven other intradural lesions, 11 degenerative cases, two infections, and two deformities. In all of them, AR was implemented using the head-up displays (HUDs) of operating microscopes.

One challenge noted in the study is the flexibility of the spine and its impact on the registration process. Carl and colleagues write: “Preoperative images, which are regularly used to define the structures that are visualised by AR in cranial procedures, usually do not reflect the actual 3D anatomy and alignment of the spine, since patient positioning on the operating room table and during preoperative imaging differs, so the alignment of the vertebra does not match.”

To address this, the study team combined intraoperative computed tomography (iCT)-based automatic patient registration with non-linear image registration to minimise overall registration errors when applying AR in spinal surgery. Preoperative image data were used for anatomical mapping (Brainlab) to segment the vertebrae automatically, with each vertebra assigned a unique colour. Manual segmentation was then used to correct the automatic results wherever a level had been assigned incorrectly or the 3D outline was insufficient.

The study team explains that when preoperative multimodal data were integrated, these data were first registered rigidly. If this image fusion showed a mismatch in the area of interest because of the spine's flexibility (for example, a changed sagittal alignment between the different imaging time points), non-linear image fusion was then performed. The final alignment was checked mainly by observing whether the segmented outlines of the vertebrae matched closely.
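The rigid-first, non-linear-if-needed logic can be illustrated with a minimal sketch. It makes several assumptions that do not come from the study: instead of the intensity-based image fusion a navigation system would normally use, it fits a rigid transform to corresponding landmark points (for example, vertebral centroids) with the Kabsch algorithm, and it measures "mismatch" as the remaining root-mean-square distance. The function names and the 2 mm `tolerance_mm` threshold are hypothetical.

```python
import numpy as np


def rigid_fit(source, target):
    """Least-squares rigid transform (Kabsch algorithm).

    source, target: (N, 3) arrays of corresponding points in mm.
    Returns rotation R (3x3) and translation t (3,) so that
    target is approximately source @ R.T + t.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_c = source.mean(axis=0)
    tgt_c = target.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (source - src_c).T @ (target - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t


def rms_residual(source, target, R, t):
    """Root-mean-square distance (mm) between transformed source points and target points."""
    mapped = np.asarray(source, dtype=float) @ R.T + t
    diff = mapped - np.asarray(target, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))


def needs_nonlinear_fusion(source, target, tolerance_mm=2.0):
    """Hypothetical decision rule: fall back to non-linear fusion if the best rigid fit
    still leaves an RMS mismatch above tolerance_mm."""
    R, t = rigid_fit(source, target)
    return rms_residual(source, target, R, t) > tolerance_mm
```

A pure repositioning of the whole spine is absorbed by the rigid fit and leaves almost no residual, whereas a change in sagittal alignment between two imaging time points bends the point set in a way no single rotation and translation can reproduce, so the residual stays large and the rule flags the case for non-linear fusion.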

Patients were placed in the supine, lateral, or prone position on the operating table of a movable 32-slice CT scanner (Brainlab). Registration scanning was performed after the surgical approach to the spine had been completed and the retractors placed, so that positional shifts caused by preparing the approach could not invalidate the registration. Once the iCT images had been sent to the navigation system, registration took place automatically, and its accuracy was documented by using a navigation pointer to select characteristic anatomical and artificial landmarks.
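The landmark check described above amounts to measuring how far each pointer-tip position lies from the corresponding landmark in the registered images. A minimal sketch, assuming both sets of coordinates are in millimetres in the same coordinate frame and that the result is summarised as mean ± sample standard deviation (the format of the registration error reported later in the article); the function names are hypothetical and the example values are invented for illustration.

```python
import math
import statistics


def landmark_errors(pointer_positions, image_positions):
    """Euclidean distance (mm) between each pointer-tip position and its landmark in image space.

    Both inputs are sequences of (x, y, z) coordinates in mm, assumed to be expressed
    in the same coordinate frame after registration.
    """
    if len(pointer_positions) != len(image_positions):
        raise ValueError("each pointer position needs exactly one matching landmark")
    return [math.dist(p, q) for p, q in zip(pointer_positions, image_positions)]


def summarise(errors):
    """Mean and sample standard deviation, i.e. the 'mean ± SD' reporting format."""
    return statistics.mean(errors), statistics.stdev(errors)


if __name__ == "__main__":
    # Illustrative values only, not data from the study.
    pointer = [(10.5, 0.0, 0.0), (0.0, 20.5, 0.0), (0.0, 0.0, 31.0)]
    image = [(10.0, 0.0, 0.0), (0.0, 20.0, 0.0), (0.0, 0.0, 30.0)]
    mean, sd = summarise(landmark_errors(pointer, image))
    print(f"{mean:.2f} ± {sd:.2f} mm")  # prints "0.67 ± 0.29 mm"
```

`statistics.stdev` needs at least two landmarks; whether the study used the sample or the population standard deviation is not stated, so that choice is an assumption here.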

After automatic registration had been checked, the HUD of the operating microscope (Pentero or Pentero 900, Zeiss) was calibrated by centring the microscope over the registration array so that the microscope crosshair pointed into the array's central divot. The displayed outline of the registration array could then be compared with the real outline of the array and adjusted if necessary to achieve optimal matching.

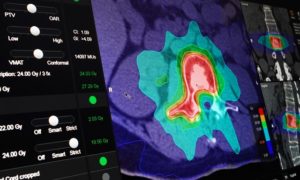

The HUD offered two display modes for AR: objects were shown either in line mode or as a 3D representation. In line mode, a solid line showed the extent of the object in the focal plane, perpendicular to the viewing axis of the microscope, while a dotted line showed the maximum extent of the object beyond the focal plane. The 3D representation displayed objects semi-transparently, with contours of varying thickness conveying an impression of their 3D shape.
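The geometry behind line mode can be sketched under simplifying assumptions made purely for illustration, not taken from the Zeiss or Brainlab implementation: the object is a 3D point cloud in microscope coordinates with z along the viewing axis, the part "in the focal plane" is the set of points within a thin slab around the focal depth, and outlines are approximated by 2D convex hulls (real segmentation outlines can be non-convex). `line_mode_outlines` and `slab_half_width` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def convex_hull(points: Sequence[Point2]) -> List[Point2]:
    """Andrew's monotone chain; returns hull vertices counter-clockwise, without repeats."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o: Point2, a: Point2, b: Point2) -> float:
        # Positive if o -> a -> b turns counter-clockwise.
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower: List[Point2] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point2] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


def line_mode_outlines(
    object_points: Sequence[Point3], focal_z: float, slab_half_width: float = 0.5
) -> Tuple[List[Point2], List[Point2]]:
    """Hypothetical line-mode geometry in microscope coordinates (z = viewing axis).

    solid:  outline of the object's cross-section in the focal plane (points within the slab)
    dotted: outline of the whole object projected along the viewing axis (maximum extent)
    """
    in_focus = [(x, y) for x, y, z in object_points if abs(z - focal_z) <= slab_half_width]
    projected = [(x, y) for x, y, _ in object_points]
    return convex_hull(in_focus), convex_hull(projected)
```

For a pyramid-shaped object focused halfway up, the solid outline is the smaller mid-height square and the dotted outline is the larger base square; if the focal plane misses the object entirely, only the dotted outline remains.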

Reporting the results, the study team found that AR could be applied successfully in all 42 cases. Low-dose protocols kept radiation exposure for registration scanning low (effective dose: cervical 0.29 ± 0.17 mSv, thoracic 3.40 ± 2.38 mSv, lumbar 3.05 ± 0.89 mSv), they write, and a low registration error (0.87 ± 0.28 mm) resulted in a reliable AR representation in which visualised objects closely matched reality, distinctly supporting anatomical orientation in the surgical field. Flexible AR visualisation, using either the microscope HUD or video superimposition, together with the ability to activate objects of interest selectively and a choice of display modes, allowed smooth integration into the surgical workflow without disturbing the actual procedure, they note. On average, 7.1 ± 4.6 objects were displayed, reliably visualising target and risk structures.

Carl and colleagues see potential for applying the technique across a wide range of spinal procedures, noting: “AR demonstrated its benefit especially in challenging anatomical situations, like reoperations and complex anatomical situations, as well as it will be beneficial in aiding the training of residents for a quicker and better understanding of anatomy. All spinal procedures that are suitable for microscope usage are candidates for microscope HUD-based AR, independent of the spine region, patient positioning, and the approach to the spine.”